Data Center Deep Dive: Insights on the Transformative Role of Nvidia’s Blackwell Chips

2024.10.31 11:42

As Nvidia’s stock price was approaching new highs during the summer, we dove deep into the Blackwell order book to understand whether the move was justified and whether there is further upside ahead.

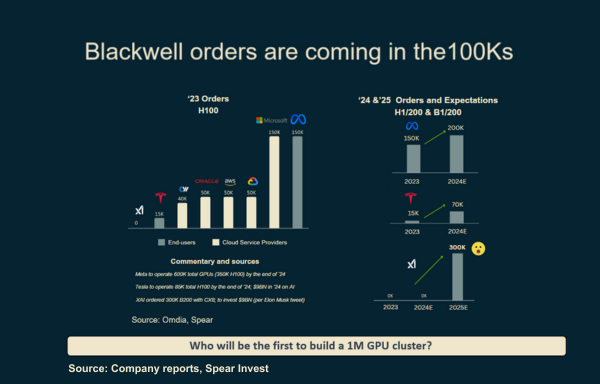

We were surprised to find that the order book was even stronger than we had modeled and a step change from the previous generation chips (H100 Hopper). Orders for Hopper averaged in the 10K-30K range in ’23 when it was introduced, whereas orders for Blackwell were coming in the 100Ks.

Over the past two months, there has been a myriad of data points confirming our view. However, we also learned something incremental: it now appears that the mix of orders will be much more heavily weighted towards the GB200 NVL72 configuration, which carries significantly higher margins.

Some background…

- Blackwell is Nvidia’s next-gen chip going into scale production this quarter. Many investors were concerned about pushouts. But instead, production is on track, and demand is off the charts.

- Blackwell comes in a single configuration (contains two GPUs), which can go into existing data centers, or in large configurations like the GB200 NVL72, which packs 72 Blackwell chips (equivalent to 144 H100s).

- One of these “chips” sells for ~$3M and contains ~2 miles of cables, costing $100K in cabling alone.

What we learned…

- Earlier this month, Foxconn’s CEO said that demand for Blackwell is “crazy”; the company is adding a new facility in Mexico to satisfy Blackwell orders.

- These comments came right after Nvidia’s CEO, Jensen Huang, stated that production is on track and demand for Blackwell is “insane.”

- In addition, an important event took place two weeks ago, the Open Compute Project (OCP) event, where data center companies showcased their latest and greatest products. The key takeaway from this event was that everyone wants the GB200 NVL72. This is positive for content, margins, and the rest of the value chain.

- Microsoft announced that Azure is the first cloud service to run the Blackwell system GB200 and reportedly increased its orders from 300–500 racks (mainly NVL36) to about 1,400–1,500 racks (about 70% NVL72), an increase of up to 5x. Subsequent Microsoft orders will primarily focus on NVL72. While this is unconfirmed, it is in line with what we heard at OCP.

- On the volume side, TSMC just reported earnings and implied that they are doubling their packaging capacity from 40K/month to 80-100K/month exiting ’25. In terms of GPU capacity, this implies that Blackwell should be exiting the year at 2M units/quarter.

- And here is the best part: according to comments from the CEO, Blackwell is now “sold out”!
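
As a rough check on the Microsoft rack figures above, the growth multiple depends on which ends of the two ranges are compared. The short Python sketch below computes that span. The function name is illustrative, and the rack counts are the unconfirmed figures quoted above.

```python
def growth_multiple_range(old_low, old_high, new_low, new_high):
    """Return (lowest, midpoint, highest) growth multiples between two ranges."""
    lowest = new_low / old_high      # smallest new order vs. largest old order
    highest = new_high / old_low     # largest new order vs. smallest old order
    midpoint = ((new_low + new_high) / 2) / ((old_low + old_high) / 2)
    return lowest, midpoint, highest


# Reported (unconfirmed) Microsoft rack orders: 300-500 before, 1,400-1,500 after.
lowest, midpoint, highest = growth_multiple_range(300, 500, 1400, 1500)
print(f"{lowest:.1f}x to {highest:.1f}x, midpoint {midpoint:.1f}x")
```

On these inputs, a 5x multiple corresponds to the top of the range (1,500 racks against 300), while comparing the midpoints of the two ranges gives roughly 3.6x.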

This is great news for Nvidia but also very positive for the rest of the value chain, including networking plays: connectors, cables, switches, interconnects, etc.

For Nvidia, the GB200 NVL72 is important because it is margin accretive, as it contains many Nvidia networking products.

While Hopper (the prior generation GPU) took Nvidia’s Data Center revenues from $20B to >$100B, Blackwell has the potential to take it above $200B.

Where is the incremental demand coming from?

Here is another key milestone that happened over the past few weeks that is very well-known in the tech/AI world but under-appreciated by investors.

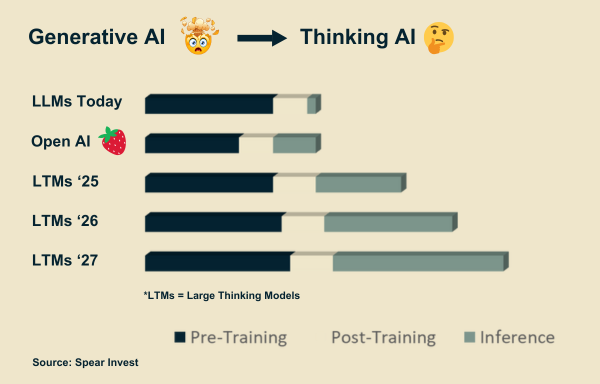

OpenAI introduced a different type of model, o1 (a.k.a.), which is parallel to GPT-4 but fundamentally distinct. The o1 is important because it is not just a one-off model but a new paradigm for training models.

The key difference between GPT-4 and o1 is that the latter can reason. The model spends a few minutes thinking before generating an answer. To do this, an additional step of Inference Compute is required, which will increase the demand for compute significantly.

Current AI models are trained on a data set and infer or generate conclusions based on that data set. While this works well for many applications e.g., search, the models hit a plateau and don’t improve. The only way to progress is to train a new generation of models.

In the future, as compute costs decline, companies will start introducing models that can reason and continuously learn. The applications for these will be anything that requires real-time response and accurate action. The o1 model is just one step in that direction. Continuous learning would take thinking to the next level.

Nvidia’s CEO, Jensen Huang, highlighted this paradigm shift in a keynote at Stanford this summer: “Today, we learn and apply (train => inference); in the future, we will have continuous learning.”

Note that inference today is a large market (we estimate >50%), but Inference Compute is a whole new use case.

Lay of the Data Center Land

In our recent webinar, we explored the evolving landscape of the AI data center market. For those who missed it, here’s a concise overview of our findings.

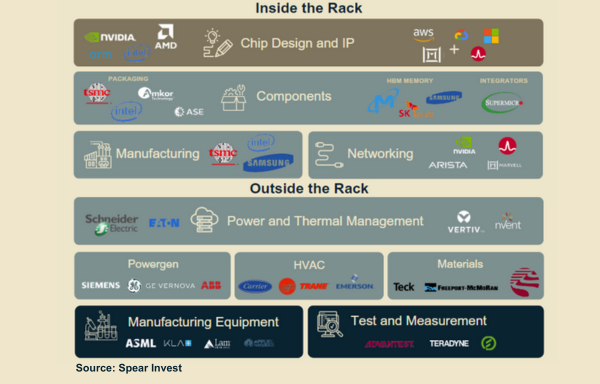

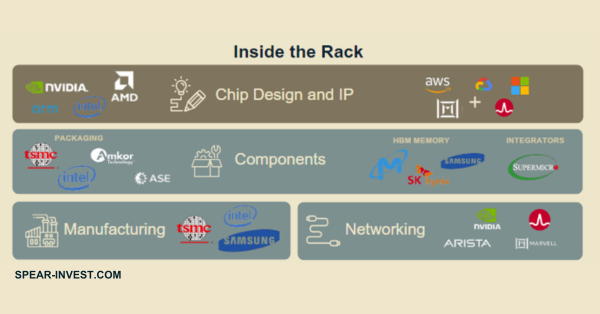

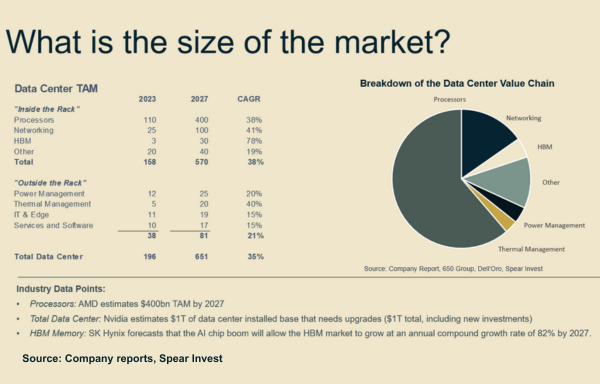

The AI data center value chain is split into two parts: inside the rack and outside the rack. Processors, Networking, and Memory are key areas inside the rack. Thermal Management, Power Management, and Manufacturing Equipment are key areas outside of the rack.

Market Overview and Growth

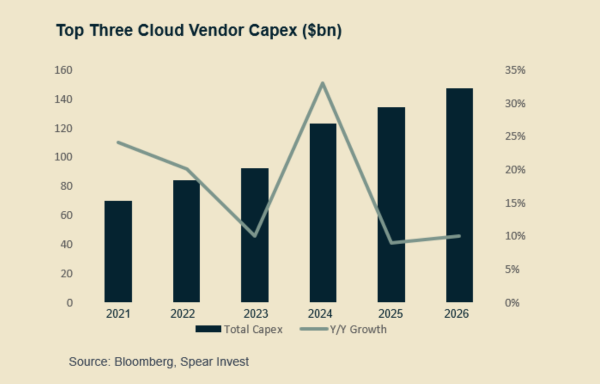

The key driver for Data Center investment has been capex spending by the Cloud Service Providers (CSPs). Capex growth surprised to the upside this year and we expect it to surprise again next year as companies are starting to provide qualitative commentary this quarter.

In addition to the cloud service providers, enterprises and governments are now coming to the market with incremental demand. As an example, xAI’s data center cluster, Colossus (100K H100 GPU cluster), just came online and is expected to double in size in a few months! Moreover, per Elon Musk’s comment, xAI has 300K B200s on order for 2025.

This is just one example. Every other model provider will have to follow if they want to stay competitive.

Who stands to benefit? Let’s dive into the value chain.

Processors

Processors are the largest market segment, representing >60% of the total market, and are expected to grow at a 40% CAGR from 2023 to 2027. There are companies that design the chips (e.g., Nvidia, AMD), which is an asset-light business model, and companies that manufacture them (e.g., TSMC), which is an asset-heavy business model.
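
To make the 40% CAGR concrete, the sketch below compounds it over the 2023 to 2027 window. It assumes a constant annual rate and four compounding periods; the function name is illustrative.

```python
def compound_multiple(cagr, start_year, end_year):
    """Cumulative growth multiple implied by a constant annual growth rate."""
    years = end_year - start_year
    return (1 + cagr) ** years


# 40% CAGR from 2023 to 2027, as cited above (four compounding periods).
multiple = compound_multiple(0.40, 2023, 2027)
print(f"Implied market size in 2027: {multiple:.2f}x the 2023 level")
```

Under that assumption, the processor market in 2027 would be a little under four times its 2023 size.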

Nvidia is the leader on the chip design side with over 80% market share, but we expect, over time, both custom chips (i.e., chips developed by the hyperscalers) and AMD to be able to capture market share.

What is driving Nvidia’s advantage?

The main point that people don’t appreciate is that software is not automatically accelerated with a GPU, and accelerated computing requires algorithms to be re-designed in order to be able to use a GPU effectively. This is where Nvidia differentiates itself with over 400 CUDA libraries, which deliver dramatically higher performance than alternatives.

Consequently, although Nvidia’s and AMD’s chips look comparable at face value, most customers are often unable to get similar utilization out of the AMD or custom chips. There is a subset of more sophisticated customers that build their own software and can get an even more attractive total cost of ownership (TCO) from competitors compared to using Nvidia’s chips, but they have to do the heavy lifting.

Examples are established tech companies like Meta & Microsoft, which are AMD’s customers, and Databricks, an AWS custom chips customer.

In addition, with Nvidia’s product innovation cycle shortened to one year, it is challenging for competitors to keep up with the development timeline. At the current utilization that customers are getting, AMD is considered to be a year behind Nvidia.

Despite Nvidia’s product being superior, there is room for competitors due to capacity constraints and supply security. Some thoughts on the competitive landscape:

- On the custom side (ASICs), companies like Broadcom and Marvell work with major Cloud Service Providers (CSPs) to design chips in-house.

- Broadcom’s CEO recently stated that over time, he expects that most of the CSP market will be custom chips. For background, CSPs generally represent 50% of the market, with Enterprise the other 50%.

- We estimate that custom chips will take half of the CSP market (1/4 of the total) as, in many cases, customers specifically ask for an Nvidia chip if they plan to leverage the above-mentioned CUDA libraries.

- Another formidable competitor is AMD. The company is particularly well positioned when Nvidia is out of capacity, as its chips are comparable in performance but require extra work. We expect that AMD will be able to capture a mid-single-digit (MSD) market share in the near term and ~10% in the medium term, which is meaningful ($500B ’28 TAM vs. $4.5B ’24 revenue base).
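
The share estimates in the list above reduce to simple arithmetic, sketched below. This is a simplification: it applies the ~10% medium-term share directly to the ’28 TAM, which is an assumption about timing, and the constant names are illustrative.

```python
TAM_2028_USD_B = 500.0        # total addressable market cited above, in $B
AMD_2024_REVENUE_USD_B = 4.5  # AMD '24 revenue base cited above, in $B
CSP_SHARE_OF_MARKET = 0.50    # CSPs as a share of the total market
CUSTOM_SHARE_OF_CSP = 0.50    # estimated custom-chip share of CSP demand

# Custom chips as a share of the total market: half of the CSP half.
custom_share_total = CSP_SHARE_OF_MARKET * CUSTOM_SHARE_OF_CSP

# AMD revenue implied by a 10% share of the '28 TAM, and the multiple on '24.
amd_share_medium_term = 0.10  # assumption: share applied to the full '28 TAM
amd_implied_revenue = amd_share_medium_term * TAM_2028_USD_B
amd_multiple = amd_implied_revenue / AMD_2024_REVENUE_USD_B

print(f"Custom chips: {custom_share_total:.0%} of the total market")
print(f"AMD at 10% share: ${amd_implied_revenue:.0f}B, {amd_multiple:.1f}x the '24 base")
```

On these inputs, a 10% share would imply roughly $50B of revenue, about 11 times the ’24 base.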

Networking

Networking is one of the areas with the highest growth potential and solid business models. Networking is expected to be a key driver behind achieving performance improvement for each generation of GPUs.

The key to networking is that its growth is a multiple of the growth in compute units, as they all need to be connected to each other.
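
One way to see why connectivity can outgrow compute is to count pairwise links. The sketch below assumes an idealized full mesh in which every unit has a direct link to every other unit. That is an upper bound chosen for illustration, not how racks are actually wired: real clusters use switched topologies, so actual link counts grow more slowly than this.

```python
def full_mesh_links(n_units):
    """Number of point-to-point links needed to connect every pair of units."""
    return n_units * (n_units - 1) // 2


small = full_mesh_links(72)    # e.g. 72 GPUs, as in one NVL72 rack
large = full_mesh_links(144)   # doubling the number of compute units
print(small, large, round(large / small, 2))
```

In this idealized case, doubling the number of compute units roughly quadruples the number of links.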

Here are some highlights.

We expect this market to reach $100B by 2027. Key components of the networking market are:

- Switches (Ethernet, InfiniBand) with key players Nvidia, Broadcom and Arista.

- Interconnects with key players Broadcom and Marvell.

- Cables & Connectors with key players Amphenol and TE Connectivity.

While there are many specialized networking products, relatively few players compete in each specific technology, and they are therefore able to capture high margins.

Memory

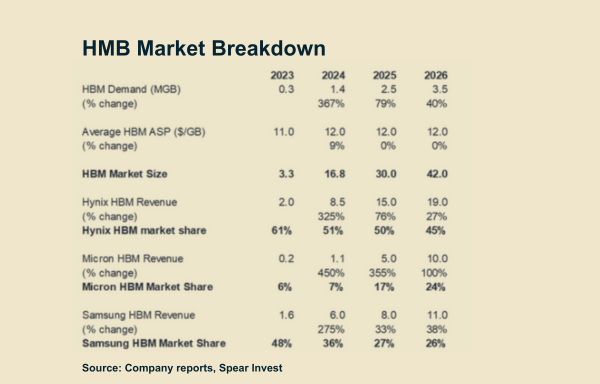

Memory has historically been a more challenging and cyclical market due to competitive dynamics. AI requires High Bandwidth Memory (HBM), which is more complex to make, but the question is whether this time will be different from a competitive standpoint. The silver lining is that HBM takes up capacity from traditional Memory, tightening the market.

Key players in the memory market are SK Hynix, Micron and Samsung. SK Hynix has a leadership position in HBM, but both Micron and Samsung are scaling their capacity in ’25.

Thermal and Power Management

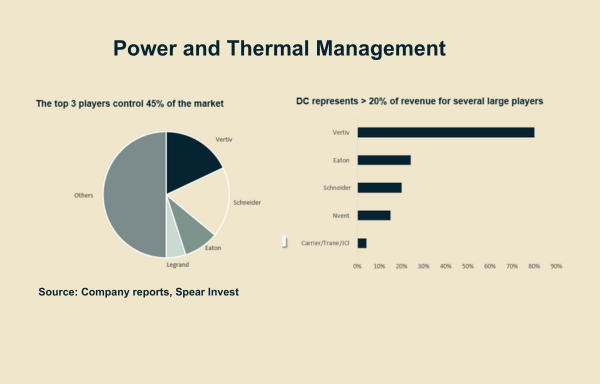

Power Management is an important area both outside of the rack and as it extends to the grid. It is a relatively consolidated market, with companies like Eaton and Schneider commanding significant market share.

Due to the increased focus on thermal management, liquid cooling has emerged as a very attractive market.

Equipment Providers

Further back in the value chain, there are the Semi-Equipment providers and Test and Measurement providers. People often miss that these sub-segments follow their own cycle (different from AI GPUs’ cycle).

For example, manufacturing equipment had a strong capex cycle (’21-’23), and now customers are digesting the previously added capacity. In many cases, like in memory, the capacity for traditional products can be converted to make AI products, which, given the muted economy, results in more limited incremental demand. Even TSMC, a company that is leading the way in manufacturing, sees a muted capex cycle and guided to the lower end of its prior capex guidance.

As we get further into the AI opportunity and the $500B near-term opportunity, there will be a need for incremental manufacturing capacity, which will be positive for the equipment providers. But we are not there just yet.

Quantifying the AI Impact on Electricity Demand

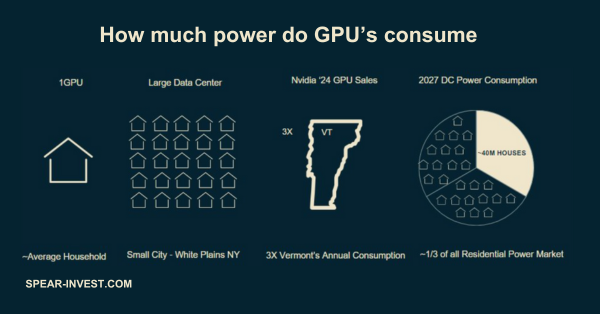

One of the most significant medium-term bottlenecks for the Data Center build-out is power availability. One large data center consumes as much power as a small city, and by 2027 we expect that Data Centers will consume the equivalent of 40 million houses, or 1/3 of the US residential power market.

We are projecting that data centers will increase their share of power demand from approximately 3% in 2023 to over 10% by 2027.
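
The projections above can be restated as implied rates, as in the sketch below. It assumes a constant annual growth rate in data centers' share of power demand between 2023 and 2027, and it reads the 40 million houses as exactly one third of the residential base. Both are simplifications, and the function name is illustrative.

```python
def implied_annual_growth(start_value, end_value, years):
    """Constant annual growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Data center share of power demand: ~3% in 2023 to ~10% in 2027.
share_growth = implied_annual_growth(3.0, 10.0, 2027 - 2023)

# 40 million houses described as 1/3 of the US residential market
# implies a total base of three times that figure.
implied_total_houses_m = 40 * 3

print(f"Implied growth in share: {share_growth:.0%} per year")
print(f"Implied residential base: {implied_total_houses_m} million houses")
```

Note that this is growth in the share of demand, not in absolute consumption. If total power demand also rises over the period, absolute data center consumption would grow faster than the roughly 35% per year implied here.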